Nov 4, 2019 4:02:47 PM

Why QA is Critical for Machine Learning Models

Machine learning (ML) is a subset of artificial intelligence (AI) that gives computer systems the ability to learn automatically from the data they are given in order to perform specific tasks. This eliminates the need to supply explicit instructions for the systems to function.

Traditional ML methodology has focused mainly on the model development process, which involves selecting the most appropriate algorithm for a given problem. In contrast, the software development process gives weight to both developing and testing the software.

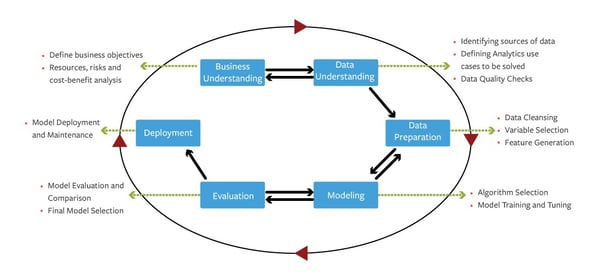

Figure 1: Cross-Industry Standard Process for Data Mining (CRISP-DM)

Testing in ML model development focuses mainly on the model’s performance in terms of its accuracy. This activity is generally carried out by the data scientist who develops the model. These models have significant real-world implications because they feed into decision making at the highest level.

Therefore, from a quality assurance perspective, a rigorous testing process is necessary to ensure that these models are stable and perform efficiently. This includes reducing the biases introduced during their development, so that data-driven decisions are accurate.

The Need for Continuous Validation of ML Models

There are various hurdles to overcome in the development of ML models. Some of the challenges that call for a QA validation team and a process of ongoing validation are specified below:

Therefore, it is essential to have systems in place to monitor and improve ML models even after deployment.
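As a rough illustration of what post-deployment monitoring could look like, the sketch below tracks a model's accuracy over its most recent labelled predictions and flags it for review when that accuracy falls too far below a baseline. This is only one possible approach and is not taken from the article: the class name `AccuracyMonitor`, the baseline value, the tolerance, and the window size are all hypothetical choices made for the example.

```python
from collections import deque


class AccuracyMonitor:
    """Hypothetical helper: rolling accuracy check for a deployed model."""

    def __init__(self, baseline_accuracy, tolerance=0.05, window=100):
        # baseline_accuracy: accuracy measured at validation time (assumed known)
        # tolerance: how far below the baseline we accept before flagging
        # window: number of most recent labelled predictions to keep
        self.baseline_accuracy = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)

    def record(self, predicted, actual):
        """Store whether one prediction matched its true label."""
        self.outcomes.append(predicted == actual)

    def rolling_accuracy(self):
        """Return accuracy over the current window, or None if it is empty."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        """True when rolling accuracy drops below baseline minus tolerance."""
        accuracy = self.rolling_accuracy()
        if accuracy is None:
            return False
        return accuracy < self.baseline_accuracy - self.tolerance


# Example usage with made-up labels
monitor = AccuracyMonitor(baseline_accuracy=0.9, tolerance=0.05, window=4)
for predicted, actual in [("a", "a"), ("b", "b"), ("a", "b"), ("b", "a")]:
    monitor.record(predicted, actual)
print(monitor.rolling_accuracy(), monitor.needs_review())
```

In practice a QA team would likely watch more than accuracy, for example shifts in the input data, but the same pattern of comparing a live measurement against a validated baseline applies.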

Part 2 will cover elements of Black Box Testing in the context of AI and ML models.

Read more about Sasken's expertise providing efficient Digital Testing strategies.

Nov 4, 2019 4:02:47 PM

Why QA is Critical for Machine Learning Models

Machine learning (ML) can be defined as a subset of Artificial Intelligence (AI) providing computer systems the ability to automatically learn from data provided to perform specific tasks. This eliminates the need of providing explicit instructions for the systems to function on a regular basis.

Traditional ML methodology has focused more on the model development process. This involved selecting the most appropriate algorithm for a given problem. In contrast, the software development process focuses on both development and testing of the software.

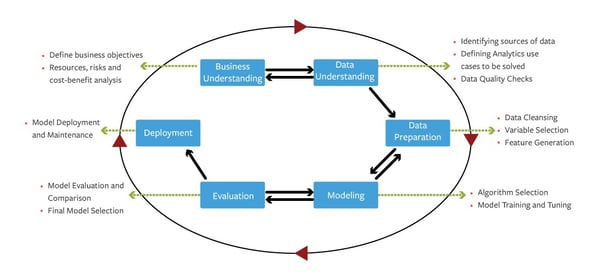

1: Cross Industry Standard Process for Data Mining (CRISP-DM)

Click to EnlargeTesting in relation to ML model development focuses more on the model’s performance in terms of its accuracy. This activity is generally carried out by the data scientist developing the model. These models have significant real-world implications as they are crucial for decision making at the highest level.

Therefore, from a quality assurance perspective, a rigorous testing process is necessary to ensure the stability and efficient performance of these models. This involves cutting down on biases in their development, to ensure accurate data driven decisions.

The Need for Continuous Validation of ML Models

There are various hurdles to overcome in the development of ML models. Some of the challenges that mandate the need for a QA validation team and the process of on-going validation are specified below:

Therefore, it is essential to ensure the presence of systems to monitor and improve upon ML models even after deployment.

Part 2 will cover elements of Black Box Testing in the context of AI and ML models.

Read more about Sasken's expertise providing efficient Digital Testing strategies.